Bun 1.0: Unveiling the Ultimate Development Tool

Since its launch, Bun 1.0 has become the talk of the town in the web development community. It is gaining popularity as an all-in-one tool for JavaScript and TypeScript development. If you haven’t used it yet and want to explore Bun 1.0 features, this post is for you. Before we jump into the features, let’s look at Bun briefly.

Overview of Bun and its Significance

Bun is an open-source JavaScript runtime and toolkit that also serves as a bundler, test runner, and package manager for JavaScript and TypeScript. Jarred Sumner is the key person behind it. Unlike Node.js and Deno, which are built on V8, Bun uses JavaScriptCore as its JavaScript engine. Bun 1.0 was launched on September 8, 2023. It is a versatile tool to build, test, debug, and run JavaScript and TypeScript applications, and it is reported to be considerably faster than Node.js and Deno. Let us go through the Bun 1.0 features one by one.

Features of Bun 1.0

Universal Tool

Bun 1.0 meets the requirements of both JavaScript and TypeScript developers. Whether you are working on a single-file project or developing a full-stack application, Bun provides an efficient development environment. Below are some features that make Bun 1.0 worth using:

- Bun supports quick command execution through bunx, its built-in equivalent of npx.
- It eliminates the need for nodemon, as it features a built-in watch mode.
- It is designed as a drop-in replacement for Node.js.
- Bun reads .env files natively, so you don’t need a third-party package for configuration.
- You get support for various file formats such as .js, .ts, .cjs, .mjs, .tsx, etc.
- Bun provides an integrated bundling solution that replaces webpack, Parcel, Rollup, esbuild, etc.
- Bun 1.0 also ships a built-in test runner that removes the need for Jest and similar tools.
- Bun is an npm-compatible package manager, so it reduces the need for yarn and npm.

High Speed and Performance

Speed and performance are other aspects of Bun that impress JavaScript developers. It lets you run your code at an excellent speed.
Bun 1.0 is several times faster than the package managers it replaces, so you won’t need tools like yarn, npm, and pnpm. Bun takes about 0.36 seconds to install a project’s dependencies. The same task may take up to 6.44 seconds with pnpm, 10.58 seconds with npm, and 12.08 seconds with Yarn. Compared to Node.js, Bun is reported to be about four times faster. Bun 1.0 provides top-notch performance, thanks to its optimized runtime and efficient code bundling. In addition, it minimizes the load times for web applications. As a result, it provides a better user experience.

Built-in Support for JavaScript and TypeScript

Bun 1.0 provides complete support for JavaScript and TypeScript. Developers can work with both languages without using any third-party transpilers. Bun makes it easy to set up your development process. You do not need to struggle with various tools. Bun handles everything so that you can focus entirely on coding.

Hot Reloading

Bun 1.0 lets you see updates in your application as soon as you make changes. Its built-in hot reloading enhances the development process by providing real-time updates to code and configurations. As a result, you can quickly spot issues and fix them. With Bun, you do not need nodemon: in watch mode, it automatically restarts the server when your TypeScript or JavaScript code changes. If you have been using npm run, you can replace it with bun run. It will reduce command execution time by at least 150 milliseconds on every run.

Installation Speed

It can be frustrating and time-consuming to install development tools and dependencies. Fortunately, Bun 1.0 supports lightning-fast installation that reduces the setup hassle. Bun uses a global module cache to avoid redundant downloads from the npm registry. It also uses the fastest system calls available on each operating system.

Compatibility

Adaptability is one of the primary Bun 1.0 features that users appreciate.
Bun can integrate with well-known server frameworks such as Hono, Koa, and Express. Web developers also get support for applications built with frameworks and tools such as Next.js, Nuxt, Astro, Vite, and Remix. In addition, Bun 1.0 is compatible with both ESM and CommonJS, which means you can use the two module systems together in the same file. This feature was missing in Node.js.

Conclusion

Bun 1.0 is an ideal choice for developers working with JavaScript and TypeScript. It has numerous built-in features, making it a game-changer in the ever-evolving web development landscape. Bun ends your dependency on complex, slow, and fragmented toolchains. It would not be wrong to say that Bun has brought revolutionary changes to the development of JavaScript projects. These are a few of the Bun 1.0 features worth mentioning.
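
To put the timings quoted in the speed section into perspective, the short Python sketch below turns them into ratios relative to Bun. The numbers are the ones cited in this post; the variable and function names are only illustrative.

```python
# Timings in seconds, as quoted earlier in this post.
timings = {"bun": 0.36, "pnpm": 6.44, "npm": 10.58, "yarn": 12.08}


def slowdown_versus(baseline: str, table: dict[str, float]) -> dict[str, float]:
    """Return how many times longer each other tool takes than the baseline."""
    base = table[baseline]
    return {
        tool: round(seconds / base, 1)
        for tool, seconds in table.items()
        if tool != baseline
    }


ratios = slowdown_versus("bun", timings)
for tool, ratio in ratios.items():
    print(f"{tool} takes about {ratio}x as long as bun")
```

Going by the quoted figures, pnpm, npm, and Yarn take roughly 18, 29, and 34 times as long as Bun for the same task.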

Keras Core 3.0 — Pioneering the Next Frontier in Deep Learning APIs

In the dynamic landscape of artificial intelligence, where breakthroughs occur in rapid succession and the boundaries of what’s possible are constantly pushed, the Keras framework has emerged as a steadfast companion for machine learning practitioners and researchers. With the advent of Keras Core 3.0, the framework embarks on a transformative journey, poised to redefine its capabilities, performance, and adaptability, and to solidify its position as a trailblazer in the realm of deep learning. This article delves into the evolution of Keras, highlights the remarkable features of version 3.0, and explores its compatibility with various backends.

Understanding Keras: A Journey from Inception to Innovation

Keras, born from the visionary mind of François Chollet in 2015, swiftly rose to prominence as a high-level neural networks API known for its intuitive design and the speed of experimentation it allows. Its initial incarnation and subsequent integration with TensorFlow marked a pivotal moment, propelling Keras into the limelight of machine learning tools. As the AI landscape evolved, Keras adapted in tandem, shaping itself to meet the diverse demands of an ever-expanding user community. Now, with the unveiling of Keras Core 3.0, this evolutionary saga culminates in a set of enhancements that not only elevate the framework’s capabilities but also redefine its role as an indispensable asset in the arsenal of AI practitioners.

Redefining Possibilities: Unveiling Keras 3.0’s Game-Changing Features

Embracing the Multi-Backend Landscape

Keras 3.0 emerges as a trailblazer with its support for multiple backends. While its roots are anchored in TensorFlow, this version casts a wider net, inviting frameworks like JAX and PyTorch into its fold. The result? A harmonious coexistence that empowers researchers and practitioners to wield their preferred framework without renouncing the prowess of Keras.
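
In practice, the backend is chosen through the `KERAS_BACKEND` environment variable, which has to be set before Keras is imported. The sketch below does this from Python rather than from the shell. `select_backend` is a hypothetical helper written for this illustration and is not part of Keras.

```python
import os

# Backend identifiers accepted by KERAS_BACKEND; note that PyTorch is spelled "torch".
SUPPORTED_BACKENDS = ("tensorflow", "jax", "torch")


def select_backend(name: str) -> str:
    """Set KERAS_BACKEND for the current process (hypothetical helper)."""
    if name not in SUPPORTED_BACKENDS:
        raise ValueError(
            f"unknown backend {name!r}; expected one of {SUPPORTED_BACKENDS}"
        )
    os.environ["KERAS_BACKEND"] = name
    return name


select_backend("jax")

# The Keras import must come only after the variable is set, for example:
# import keras_core as keras
```

Validating the name up front is a design choice of this sketch: it turns a misspelled backend into an immediate error instead of a surprise at import time.
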
Precision Perfected: Advanced Performance Optimization

Keras Core 3.0 doubles down on performance optimization, seamlessly weaving techniques like mixed-precision training and distributed training into its fabric. The result is a faster training process and better utilization of hardware resources. These optimization strategies work behind the scenes, enabling users to focus on the art of model development and experimentation, confident that the framework is orchestrating the complex ballet beneath.

Expanding the Horizons: A Flourishing Ecosystem

The Keras ecosystem flourishes with renewed vigour in Keras 3.0. The framework’s enhanced support for KerasCV and KerasNLP, specialized libraries tailored for computer vision and natural language processing, empowers it to excel in these domains. This synergy doesn’t just streamline the development process; it equips users with an extensive toolkit to conquer the intricate challenges inherent in these fields.

Uniting the Diverse: Cross-Framework Compatibility

Keras Core 3.0 ushers in an era of harmony across deep learning frameworks. Models crafted in Keras move between the TensorFlow, JAX, and PyTorch backends, reflecting a unification in an ecosystem historically divided. This compatibility erases barriers, fostering an environment of collaboration and experimentation, where diverse tools coalesce to drive innovation.

Evolution by Design: The Philosophy of Progressive Disclosure

Keras 3.0 embodies the ethos of progressive disclosure, catering to both novices and seasoned practitioners. The API unfolds in a manner that facilitates the gentle onboarding of newcomers while gradually unveiling the advanced features craved by experts. This balanced approach ensures Keras remains accessible and indispensable, irrespective of users’ proficiency levels.
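
To see why the mixed-precision training mentioned under performance optimization keeps some values in higher precision, it helps to look at what half precision does to a small update. The sketch below uses only the Python standard library and is not Keras code; `to_float16` is a helper made up for this example, and the weight and update values are arbitrary.

```python
import struct


def to_float16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


weight = 1.0
update = 1e-4  # a small gradient step, chosen arbitrarily

# Accumulating the step in half precision loses it entirely, because the
# spacing between adjacent float16 values near 1.0 is about 0.001.
half_result = to_float16(to_float16(weight) + to_float16(update))

# A higher-precision accumulator keeps the step (Python floats are 64-bit).
full_result = weight + update

print(half_result)
print(full_result)
```

This is the usual motivation for computing in 16-bit while storing the variables being updated in 32-bit.
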
A Stateless Symphony of Design: The Stateless API Paradigm

The introduction of the stateless API marks a paradigm shift in Keras 3.0. Aligned with the trend of integrating functional programming concepts in deep learning, this design choice fosters modular architecture, encourages code reusability, and champions clean code organization. This leap not only elevates the development experience but also makes code easier to maintain and to collaborate on.

Navigating the Possibilities: Keras for TensorFlow, JAX, and PyTorch

Embarking on the Voyage: Installation

Getting started with Keras Core 3.0 is an effortless endeavour. Installation guides for each supported backend are readily available in the official documentation, giving users the freedom to opt for the backend that suits their preferences and project requirements. This adaptability cements Keras as an indispensable entity amid the ever-shifting currents of AI technology.

For installation:

    $ pip install keras-core

Then import it in Python:

    import keras_core as keras

Aligning with the Core: Backend Configuration

Configuring the backend is a simple step, often requiring a mere few lines of code. This configuration determines the engine propelling Keras, be it TensorFlow, JAX, or PyTorch. This flexibility empowers users to transition fluidly between backends, paving the way for efficient exploration and experimentation.

Run the following commands to configure the backend:

    $ export KERAS_BACKEND="jax"
    $ python train.py

Or:

    $ KERAS_BACKEND=jax python train.py

Mastery in Action: Integrating KerasCV and KerasNLP

The integration of KerasCV and KerasNLP into Keras Core 3.0 paints a transformative landscape. KerasCV covers computer vision tasks, providing dedicated APIs and pretrained models for image classification, object detection, and segmentation.
Meanwhile, KerasNLP empowers users to navigate the challenges of natural language processing with access to cutting-edge language models, tokenization tools, and sequence manipulation layers.

Here is a KerasCV usage example:

    import numpy as np
    import keras_cv
    import keras_core as keras
    from keras_core import ops

    # Download a sample image and load it as a NumPy array.
    filepath = keras.utils.get_file(origin="https://i.imgur.com/gCNcJJI.jpg")
    image = np.array(keras.utils.load_img(filepath))

    # Resize to 640x640 and add a leading batch dimension.
    image_resized = ops.image.resize(image, (640, 640))[None, ...]

    # Load a pretrained YOLOv8 detector that reports boxes in "xywh" format.
    model = keras_cv.models.YOLOV8Detector.from_preset(
        "yolo_v8_m_pascalvoc",
        bounding_box_format="xywh",
    )
    predictions = model.predict(image_resized)

A Confluence of Innovation

In the ever-accelerating world of deep learning, Keras Core 3.0 emerges as a beacon of innovation and adaptability. With its embrace of multiple backends, advanced performance optimization, amplified ecosystem, cross-framework harmony, philosophy of progressive disclosure, and the advent of the stateless API, Keras 3.0 redefines itself as the quintessential deep learning API. It resonates across the spectrum of users, from novices venturing forth to experts charting the boundaries of possibility. As the grand symphony of deep learning unfolds, Keras Core 3.0 remains a steadfast companion, empowering developers to manifest their visions with finesse and precision.
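
The stateless style highlighted in this article can be illustrated without any framework. The following is a conceptual sketch in plain Python, not the Keras API itself: `Params`, `dense_forward`, and `sgd_step` are names made up for this example, and the numbers are arbitrary.

```python
from typing import NamedTuple


class Params(NamedTuple):
    """Immutable container for the parameters of a tiny one-unit layer."""
    weight: float
    bias: float


def dense_forward(params: Params, x: list[float]) -> list[float]:
    """Pure function: the output depends only on the arguments."""
    return [params.weight * xi + params.bias for xi in x]


def sgd_step(params: Params, grads: Params, lr: float) -> Params:
    """Return new parameters instead of mutating the old ones."""
    return Params(
        weight=params.weight - lr * grads.weight,
        bias=params.bias - lr * grads.bias,
    )


old = Params(weight=2.0, bias=1.0)
new = sgd_step(old, Params(weight=0.5, bias=0.5), lr=1.0)

print(dense_forward(old, [1.0, 2.0]))  # the old parameters are still usable
print(dense_forward(new, [1.0, 2.0]))
```

Because nothing is mutated, functions written this way can be handed to transformations that expect pure functions, which is the property the JAX backend in particular relies on.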

NetDevOps — A Comprehensive Guide with Components and Obstacles

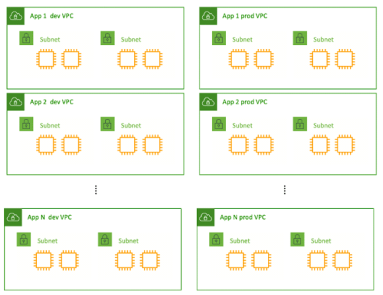

With Agile development processes driving automation, the software development industry has experienced a massive shift towards NetDevOps. The credit goes to the network automation it offers to fast-paced modern businesses. Because a non-DevOps approach revolves around individual tools, teams may experience a lack of traceability, testing, and collaboration. NetDevOps can help you cope with these limitations and eliminate security vulnerabilities while ensuring the expected performance. There is a lot you need to know about NetDevOps if you’re looking to incorporate it into your development process. This guide walks you through the main NetDevOps components and obstacles for a better understanding.

What is NetDevOps and Why is it Worth Using?

As the term itself suggests, NetDevOps is a technical blend of networking and DevOps. It applies DevOps principles to the deployment and management of network services. If we dig deeper, NetDevOps applies the CI/CD concepts of DevOps to networking activities for faster delivery. In addition, its automated workflows bolster abstraction, codification, and the implementation of Infrastructure as Code (IaC). NetDevOps also eliminates configuration drift to embed quality and resiliency within the network. In a nutshell, it improves agility by driving clear workflows that aid auditing, governance, and troubleshooting.

Challenges You May Face During NetDevOps Development

Risk Aversion

One of the challenges that organizations may face during NetDevOps development is risk aversion. Many companies are hesitant to adopt new technologies and practices due to the fear of potential failures or disruptions to their existing network infrastructure. This risk aversion can hinder the adoption of NetDevOps methodologies, which emphasize automation, continuous integration, and continuous delivery.
To address this challenge, organizations need to focus on building trust by demonstrating the benefits and success stories of NetDevOps implementation.

Technical Debt

Technical debt refers to the accumulated shortcuts, workarounds, and suboptimal code or configurations that result from rushed or incomplete implementation of network automation processes. This can lead to various issues, including increased complexity, reduced maintainability, and decreased scalability. To mitigate technical debt, organizations should prioritize code quality, conduct regular code reviews, and follow established best practices and coding standards. Implementing automated testing frameworks and leveraging continuous integration and delivery pipelines can help identify and address technical debt early in the development process.

Skills Shortage

NetDevOps development requires a unique set of skills that combine network engineering, software development, and automation expertise. However, finding individuals with a strong skill set in these areas can be challenging due to the shortage of qualified professionals. To address this issue, organizations can invest in training and upskilling their existing network and IT teams. This can include providing access to relevant courses, certifications, and hands-on training programs. Collaboration with external training providers or universities can also help bridge the skills gap.

Documentation

Effective documentation plays a crucial role in NetDevOps development, as it ensures that network configurations, automation workflows, and troubleshooting processes are well-documented and accessible to the team. However, maintaining up-to-date and comprehensive documentation can be challenging, especially when changes occur rapidly in dynamic network environments. Organizations can address this challenge by adopting documentation frameworks and tools that facilitate automated documentation generation.
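
One way to read "automated documentation generation" is to render documentation from the same structured data that drives automation, so the two cannot drift apart. The Python sketch below assumes a hypothetical device inventory: the hostnames, fields, and the `render_inventory_doc` function are invented for illustration, and the addresses come from the 192.0.2.0/24 range reserved for documentation.

```python
# Hypothetical inventory; in practice this might be loaded from a
# version-controlled file or an inventory system.
inventory = [
    {"hostname": "edge-rtr-01", "role": "router", "mgmt_ip": "192.0.2.1"},
    {"hostname": "core-sw-01", "role": "switch", "mgmt_ip": "192.0.2.2"},
]

COLUMNS = ["hostname", "role", "mgmt_ip"]


def render_inventory_doc(devices: list[dict[str, str]]) -> str:
    """Render a plain-text device table from structured inventory data."""
    # Each column is as wide as its longest value (or its header).
    widths = {
        col: max([len(col)] + [len(dev[col]) for dev in devices])
        for col in COLUMNS
    }
    header = "  ".join(col.ljust(widths[col]) for col in COLUMNS)
    rule = "  ".join("-" * widths[col] for col in COLUMNS)
    rows = [
        "  ".join(dev[col].ljust(widths[col]) for col in COLUMNS)
        for dev in devices
    ]
    return "\n".join([header, rule, *rows])


print(render_inventory_doc(inventory))
```

Running a script like this in a pipeline on every change would keep the rendered table in step with the inventory.
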
Version control systems, wiki platforms, and collaborative document editing tools can also help streamline the documentation process.

Unstandardized Data

NetDevOps development relies on gathering and analyzing network data to drive automation and decision-making processes. However, network data can be highly diverse and unstandardized, making it challenging to extract meaningful insights and build reliable automation workflows. Organizations should invest in data normalization and standardization techniques to ensure consistency and compatibility across different data sources. This can include using standardized data models, implementing data transformation pipelines, and leveraging data analytics tools for data cleansing and preprocessing.

Tool Limitations

NetDevOps development often requires the use of various tools and technologies, including network configuration management systems, automation frameworks, and orchestration platforms. However, tool limitations can arise, such as a lack of integration capabilities, limited scalability, or inadequate support for specific network devices or protocols. To overcome these challenges, organizations should thoroughly evaluate and choose tools that align with their specific requirements and network environment. They should also consider open-source solutions that offer flexibility and community support.

Top NetDevOps Components

Modularity

Modularity is a key component of NetDevOps, enabling the creation of flexible and scalable network architectures. By breaking down network systems into modular components, organizations can easily adapt and scale their networks as per evolving requirements. Modularity facilitates the deployment of microservices, allowing for the independent development and deployment of specific network functionalities. This approach not only enhances agility but also simplifies troubleshooting and maintenance, as issues can be isolated to specific modules.
For instance, using containerization technologies like Docker, network functions can be encapsulated within lightweight, portable containers, ensuring consistent behavior across different environments.

Example 1: Multiple applications in a single VPC network architecture

Example 2: Single application per VPC network architecture

Cultural Changes

Cultural changes play a crucial role in successfully implementing NetDevOps. Traditionally, network and operations teams operated in silos, with limited collaboration between them. However, NetDevOps encourages a cultural shift towards increased collaboration, communication, and shared responsibility. By fostering a DevOps culture, organizations can break down barriers between different teams, promoting a collaborative approach to network management. This cultural shift involves embracing shared goals, establishing cross-functional teams, and encouraging continuous learning and skill development.

Automation and Infrastructure as Code

Automation and Infrastructure as Code (IaC) are pivotal components of NetDevOps, enabling organizations to achieve faster and more efficient network deployments. Automation eliminates manual, error-prone tasks and accelerates the provisioning and configuration of network devices. Tools like Ansible, Puppet, or Chef enable the automation of network device configurations, ensuring consistency and reducing human errors. Infrastructure as Code allows network infrastructure to be defined and managed through machine-readable configuration files, promoting version control and reproducibility.

Continuous Integration/Continuous Deployment

Continuous Integration/Continuous Deployment (CI/CD) practices are integral to NetDevOps, enabling organizations to rapidly and reliably deploy network changes. CI/CD pipelines automate the process of integrating code changes, testing them, and deploying them to